Despite knowing that errors in testing can lead to serious patient harm, too many clinical laboratories are performing only the minimum amount of quality control (QC) required by regulation and recommended by manufacturers, leading some in the industry to call for labs to adopt more robust statistical quality control (SQC) approaches designed to focus on patient risk.

A recent study of current SQC practices in U.S. laboratories found that 21 leading academic laboratories surveyed typically employ two standard deviation (SD) control limits in spite of their known high false rejection rate. It also found that labs generally use a minimum number of control measurements per run (two) and often perform the minimum frequency of SQC, explained James Westgard, PhD, founder of Westgard QC (Am J Clin Pathol 2018;150:96-104). “Based on this survey, it appears that current QC practices are based on mere compliance to CLIA minimums, rather than the best practices for patient care,” Westgard said.
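The high false rejection rate of 2 SD limits can be illustrated with a short calculation. The sketch below is not taken from the survey. It assumes in-control measurements that are Gaussian and independent, and it assumes a run is rejected whenever any single control falls outside the limits. The function name is hypothetical.

```python
import math

def false_rejection_probability(limit_sd: float, n_controls: int) -> float:
    """Chance that at least one of n in-control measurements
    falls outside +/- limit_sd (Gaussian, independent: assumptions)."""
    # Two-sided tail probability for a single in-control measurement
    p_single = math.erfc(limit_sd / math.sqrt(2))
    # A run is rejected if any one of the n controls exceeds the limit
    return 1.0 - (1.0 - p_single) ** n_controls

if __name__ == "__main__":
    for n in (1, 2, 3):
        p2 = false_rejection_probability(2.0, n)
        p3 = false_rejection_probability(3.0, n)
        print(f"N={n}: 2 SD limits {p2:.1%}, 3 SD limits {p3:.1%}")
```

Under these assumptions, two controls judged against 2 SD limits flag a perfectly stable method in roughly 9% of runs, compared with well under 1% for 3 SD limits.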

CLIA requires laboratories to have QC procedures in place to monitor the accuracy and precision of the complete testing process. Under CLIA, labs must perform at least two levels of external controls on each test system for each day of testing and follow all specialty/subspecialty requirements in the CLIA regulations for nonwaived tests.

To minimize QC when performing tests for which manufacturers’ recommended QC frequency is lower than CLIA requires (such as once per month), the Centers for Medicare and Medicaid Services (CMS) has provided guidance to labs on how to develop an individualized quality control plan (IQCP). An IQCP involves performing a risk assessment of potential sources of error in all phases of testing and putting in place a QC plan to reduce the likelihood of errors.

However, developing an IQCP is voluntary and many labs choose not to adopt such a plan, instead opting for the CLIA requirement of two QC levels each day. “For comparison of best practices, the [Clinical and Laboratory Standards Institute] CLSI C24-Ed4 guideline for statistical QC recommends that SQC strategies be based on the quality required for intended use,” Westgard noted. “Typically that is defined as ‘allowable total error,’ the observed imprecision and bias of the measurement procedure, the rejection characteristics of the SQC procedure, and the risk of harm from undetected errors, such as those based on Curtis Parvin’s patient risk model.”

The Patient in Focus

Curtis Parvin, PhD, a longtime leader in clinical laboratory QC, has been instrumental in shifting the focus of QC to place more emphasis on how failures might actually affect patient care. His MaxE(Nuf) patient risk model predicts the maximum expected increase in the number of erroneous patient results reported and acted on when an out-of-control condition occurs in a measurement procedure given a laboratory’s QC strategy.

That emphasis on patients helped inform the latest version of the CLSI guideline referenced by Westgard, C24-Ed4, “Statistical Quality Control for Quantitative Measurement Procedures,” which was last updated in 2016. The fourth edition is now more closely aligned with the patient-risk-focused approach used in another CLSI guideline, EP23, “Laboratory Quality Control Based on Risk Management,” explained Parvin, who chaired the C24 update committee when he worked at Bio-Rad. Parvin is now a consultant.

“EP23 defines patient risk as the combination of the probability of occurrence of patient harm and the severity of that harm,” Parvin said. “The higher the expected severity of harm to the patient, the lower the probability of occurrence has to be in order for the risk to be acceptable.”

C24-Ed4 uses more patient-risk-focused language and updates a number of performance metrics, noted Parvin. “Instead of talking about the probability of a rule rejection for an instrument, you’re talking about the expected number of erroneous patient results reported because of an undetected out-of-control condition,” he emphasized. “The focus is on the potential for patient harm.”

Testing Volume and QC Frequency

As QC in clinical laboratories evolves, a greater emphasis is being placed on QC frequency and QC schedules as a critical part of an overall strategy. The higher the volume of testing, the more labs may need to run QC in order to minimize patient risk.

“Ideally, a technologist would be able to program an instrument to run QC at a certain frequency, such as every 50th sodium test,” Parvin said. “A lot of instruments today can’t do that, but a tech can schedule QC based on the number of sodium tests typically done each day.”
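The volume-based scheduling Parvin describes comes down to simple arithmetic. The sketch below is an illustration only. It assumes testing is spread evenly across the testing hours, and the function names and the daily volume of 400 sodium tests are hypothetical.

```python
import math

def runs_per_day(daily_test_volume: int, run_size: int) -> int:
    """Number of runs needed so that no run holds more than
    run_size patient tests between consecutive QC events."""
    if daily_test_volume <= 0 or run_size <= 0:
        raise ValueError("volume and run size must be positive")
    return math.ceil(daily_test_volume / run_size)

def qc_interval_minutes(daily_test_volume: int, run_size: int,
                        testing_hours: float = 24.0) -> float:
    """Time between QC events, assuming volume is spread evenly
    over the testing hours (a simplifying assumption)."""
    runs = runs_per_day(daily_test_volume, run_size)
    return testing_hours * 60.0 / runs

if __name__ == "__main__":
    # Hypothetical lab: 400 sodium tests per day, QC every 50th test
    print(runs_per_day(400, 50))         # 8 runs
    print(qc_interval_minutes(400, 50))  # 180.0 minutes between QC events
```

A lab whose volume peaks at certain hours would need to schedule QC more densely during those peaks than this even-spread estimate suggests.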

Volume is one part of the equation, but medical risk and cost must also be considered, added Robert Schmidt, MD, PhD, MBA, medical director of quality optimization for ARUP Laboratories in Salt Lake City. “Ultimately, labs should come up with an equation that factors in the cost of running the overall system, the cost of bad results, and the cost of doing QC,” he noted. “There’s a trade-off among those things.”

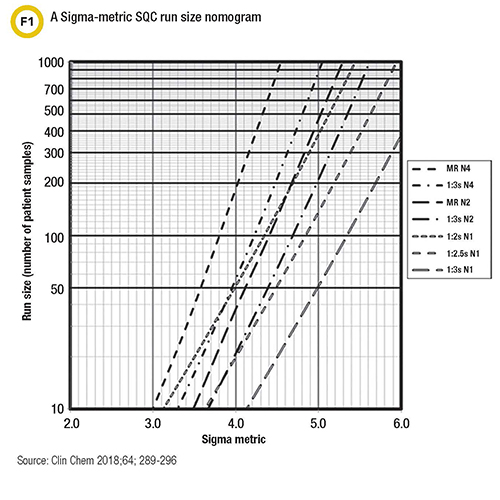

Westgard suggests labs make an objective assessment using Parvin’s risk model that places QC frequency in terms of run size or the number of patient samples between consecutive QC events. Graphic nomograms that relate a method’s analytical sigma-metric directly to the control rules, number of control measurements, and run size can be useful tools for laboratories, he said (Figure 1). These nomograms can guide labs in choosing how many patient specimens they reasonably can examine between QC evaluations to be assured that not too many erroneous patient results occur when a test is out of control.

“Rather than tackle the mathematics, labs can now use simple visual tools to determine appropriate QC frequencies for their methods,” Westgard said.
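The sigma-metric that serves as the input to such nomograms is commonly calculated from the allowable total error, bias, and imprecision of a method. The sketch below uses that widely cited formula, which the article itself does not spell out, with all quantities expressed as percentages at the same concentration. The example values are hypothetical, and the sketch does not reproduce the nomogram's mapping from sigma to control rules or run size.

```python
def sigma_metric(tea_pct: float, bias_pct: float, cv_pct: float) -> float:
    """Sigma-metric = (allowable total error - |bias|) / CV,
    with all three inputs in percent (commonly used definition)."""
    if cv_pct <= 0:
        raise ValueError("CV must be positive")
    return (tea_pct - abs(bias_pct)) / cv_pct

if __name__ == "__main__":
    # Hypothetical method: TEa 10%, bias 1%, CV 1.5%
    print(sigma_metric(10.0, 1.0, 1.5))
    # The same method with twice the imprecision has half the sigma
    print(sigma_metric(10.0, 1.0, 3.0))
```

A lower sigma-metric means less margin between routine variation and the allowable error, which is why lower-sigma methods generally call for more QC.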

Closing the Bracket

Central Pennsylvania Alliance Laboratory, a specialty lab that performs about 1 million tests per year, has chosen to go beyond the bare minimum in performing QC. Jennifer Thebo, PhD, MT(ASCP), the lab’s director of technical operations and scientific affairs, said that technologists perform QC at the start of a shift and again at the end of a shift using three levels of control. This helps minimize delays in catching problems and ensures that the lab deals with an issue immediately.

The drawback of performing QC just once a day or once per shift is that when testing errors do appear, it’s impossible to know exactly when they started, Thebo noted. This means a technologist must go back and rerun previous tests to see if they were affected by the out-of-control condition. For example, imagine one technologist runs two or three levels of control on Monday morning and all are within range, Thebo said. Testing continues throughout the next 24 hours. Then a second technologist runs QC on Tuesday morning and finds that results are not within range. The second technologist troubleshoots and fixes whatever the problem was, but it is unclear when the problem began, and it’s often assumed that the problem was created by performing daily maintenance. Too often, technologists do not perform a lookback to the previous day, which means that some test results that were reported may not be accurate.

“Running QC both at the start of a shift and at the end of a shift closes the bracket and saves everyone a lot of headache,” Thebo said. “While this is an example of QC per shift, if the testing volume for a particular test is high, QC may need to be performed at more frequent intervals in order to minimize retesting and corrections.”

C24-Ed4 recommends the practice of bracketed QC for continuous measurement processes and goes a step further in recommending that the reporting of patient test results be based on two QC events, one before and one after, that bracket a group of patient samples, Westgard noted. The number of patient samples between QC events, or run size, should be optimized on the basis of the risk of harm if erroneous results are reported, he said, adding that this can be done by following the road map for planning SQC strategies outlined in the guideline.
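The bracketing logic can be sketched in a few lines. This is a minimal illustration and not the procedure specified in C24-Ed4. It assumes a chronological list of QC events and patient results, and the `Event` class, the labels, and the three-way split are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Event:
    kind: str            # "qc" or "patient"
    label: str           # hypothetical QC event name or sample ID
    passed: bool = True  # only meaningful for QC events

def bracket_results(events: List[Event]) -> Tuple[List[str], List[str], List[str]]:
    """Split patient results into (releasable, needs_review, pending).

    A result is releasable only if the QC events immediately before
    and after it both passed. Results after the last QC event stay
    pending because their bracket has not been closed yet."""
    releasable: List[str] = []
    needs_review: List[str] = []
    pending: List[str] = []
    opening_qc_passed = False  # no opening QC seen yet
    for ev in events:
        if ev.kind == "patient":
            pending.append(ev.label)
        elif ev.kind == "qc":
            if opening_qc_passed and ev.passed:
                releasable.extend(pending)
            else:
                needs_review.extend(pending)  # lookback candidates
            pending = []
            opening_qc_passed = ev.passed
        else:
            raise ValueError(f"unknown event kind: {ev.kind}")
    return releasable, needs_review, pending

if __name__ == "__main__":
    shift = [
        Event("qc", "start-of-shift", passed=True),
        Event("patient", "P1"), Event("patient", "P2"),
        Event("qc", "mid-shift", passed=True),
        Event("patient", "P3"),
        Event("qc", "end-of-shift", passed=False),
        Event("patient", "P4"),
    ]
    print(bracket_results(shift))
```

In this example only P3 needs a lookback, because the passing mid-shift QC limits how far back the failure could reach. With QC run only once a day, every result since the previous morning would fall into the review group.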

Going Beyond Good Enough

Why don’t more laboratories go beyond the minimum when it comes to QC? In many cases, it comes down to lack of knowledge or lack of resources, according to Parvin. “I believe that most labs want to do good quality work and believe they are doing good quality work,” he said. “They are assuming that the guidance provided by the manufacturer is good enough. But if they’re doing the minimum, it’s almost guaranteed that for some labs it’s not enough. The whole focus of QC design in recent years is that one size fits all just doesn’t work.”

Thebo, who also performs inspections for the College of American Pathologists (CAP), agrees. “I think most labs are at least minimally performing QC but often they are not doing lookbacks to the previous day when a problem is identified,” she said. “A lot of labs are simply understaffed, and they struggle with doing more than the minimum.”

Part of the challenge in improving QC is changing perceptions about QC, Schmidt said. “The way QC is viewed in the laboratory is very different than how QC is viewed outside the lab,” he explained. “QC in other industries focuses more on using QC data for quality improvement while in labs it’s much more about compliance. There’s a tendency to think, ‘If it’s good enough for CAP or CLIA, it’s good enough.’ We need to go beyond good enough.”

Westgard believes better education and training on QC are needed and urges laboratorians to assess their knowledge by asking themselves a few questions: Have I read C24-Ed4? Do I understand what is recommended as best practices? Have I implemented a planning process following the C24-Ed4 road map? Do I have the necessary tools to support applications in my laboratory?

Laboratorians who answered no to any of the questions above lack the knowledge and capabilities to provide appropriate QC and deliver test results that are safe for their patients, Westgard said. He advises taking proactive steps to remedy this deficit. In the end, good QC is all about delivering the best patient care possible.

Kimberly Scott is a freelance writer who lives in Lewes, Delaware.