Delta checks are an essential quality control tool for clinical laboratories. Their role has evolved over time, and labs continue to refine how they use delta checks and set their parameters to detect unexpected changes in an individual patient's test results.

First described in the 1970s, when Ladenson proposed it as a system of quality control based on computer detection of changes in individual patient test results, the delta check procedure compares the current result(s) to the previous result(s) from the same individual using a specified cutoff value. That cutoff value represents the maximal allowable change per laboratory procedure. It may be expressed as an absolute change, percent change, rate of change, or rate of percent change.
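The four ways of expressing a delta can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Result` class, the `delta_forms` function, the potassium values, and the choice of hours as the time unit are all hypothetical and do not come from any laboratory information system.

```python
from dataclasses import dataclass


@dataclass
class Result:
    value: float  # analyte concentration, e.g. potassium in mmol/L (assumed unit)
    hours: float  # collection time in hours on an arbitrary clock (assumed unit)


def delta_forms(previous: Result, current: Result) -> dict:
    """Return the four delta expressions for one previous/current pair."""
    absolute = current.value - previous.value
    percent = 100.0 * absolute / previous.value
    elapsed = current.hours - previous.hours
    return {
        "absolute": absolute,              # change in concentration units
        "percent": percent,                # change relative to the previous result
        "rate": absolute / elapsed,        # concentration units per hour
        "percent_rate": percent / elapsed, # percent per hour
    }


# Hypothetical pair: 4.0 mmol/L, then 5.0 mmol/L eight hours later.
deltas = delta_forms(Result(4.0, 0.0), Result(5.0, 8.0))
print(deltas)
```

A production version would need to handle a previous result of zero and two results with the same collection time, both of which raise `ZeroDivisionError` in this sketch.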

Furthermore, the change may be classified as a directional delta, that is, as an increase or a decrease over time. A change that exceeds the cutoff value triggers a delta check flag that prompts further investigation of test results for that particular analyte. This procedure typically applies to measurements for several analytes on a panel. Once a lab detects an inaccuracy, rejecting the entire specimen and recollecting it is the most suitable approach.
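As a minimal sketch of how a directional flag might be evaluated, the function below compares a signed delta against a cutoff. The name `delta_flag` and the three direction labels are assumptions made for illustration, not settings of any particular middleware.

```python
def delta_flag(delta: float, cutoff: float, direction: str = "either") -> bool:
    """Return True when the change exceeds the cutoff in the monitored direction.

    delta is the signed change (current minus previous); cutoff is positive.
    """
    if direction == "increase":
        return delta > cutoff
    if direction == "decrease":
        return -delta > cutoff
    if direction == "either":
        return abs(delta) > cutoff
    raise ValueError(f"unknown direction: {direction!r}")


# Hypothetical potassium change of -1.2 mmol/L against a 1.0 mmol/L cutoff.
print(delta_flag(-1.2, 1.0))              # True: magnitude exceeds the cutoff
print(delta_flag(-1.2, 1.0, "increase"))  # False: the change is a decrease
print(delta_flag(-1.2, 1.0, "decrease"))  # True
```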

The delta check process was introduced as a quality control method to detect misidentified specimens. But with the rise in patient wristbands, barcode scanning, and improved patient identification, the frequency of mislabeled cups or tubes has drastically decreased in recent years. Therefore, many institutions now are revising or questioning their delta check parameters.

Automated, as opposed to manual, delta checking became more useful with the rise of autoverification of results. Here, the comparison with historical results became just another layer of automated quality control. The most common true-positive delta check flags result from inappropriate specimen draws: a short draw, contamination of the specimen with intravenous (IV) fluid, or a tube containing the wrong anticoagulant. Many patients, especially the critically ill, receive various kinds of IV fluids through a central line.

While nursing protocols advise collecting blood from the opposite arm, this is not always possible. Often a severely ill patient proves to be a hard stick, or the opposite arm is not accessible. If the port of the central line is used as an alternative, it should be flushed generously before blood collection. Even invisible contamination with IV fluid has the potential to drastically alter electrolyte analysis. Delta checks alert technologists to this problem, and the best remedy is to recollect the specimen(s).

Finding the Sweet Spot

The key question that remains is, how do we best pick up specimen inaccuracies without an overwhelming number of false-positive delta check flags? Historically, labs have derived delta check cutoffs from the scientific literature, but these are rather generic and need to be adjusted over time. Cutoffs developed from the literature don’t account for patient location (emergency department, renal or regular medical unit, etc.), disease state, or patient population. Patients receiving dialysis are especially prone to drastic changes in blood urea nitrogen, creatinine, and potassium levels.

Yet exactly these analytes are typically part of a delta check process. In addition, literature data doesn’t necessarily reflect the patient population of any specific laboratory. This means that labs might choose cutoff values that are too tight or too wide, making error detection less than optimal.

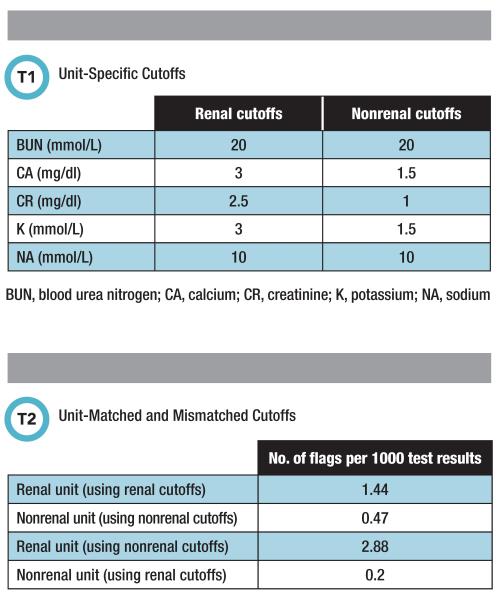

To further optimize the entire delta check process, labs might consider using their own historical patient data. With a patient data export from the laboratory information system, labs will be able to test various analyte cutoffs on this sample data set. This offers the advantage of selecting cutoffs prior to implementing them. This approach also enables a highly individualized delta check process based on a laboratory’s needs. Table 1 reflects our experience at Santa Clara Valley Medical Center in using this method to establish unit-specific cutoff values.
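One way to try candidate cutoffs on an exported data set is sketched below. It is not the method used to build the tables in this article. The row layout `(patient_id, collected_at, value)`, the function name `flags_per_1000`, the sample values, and the choice to count only results that have a previous result in the denominator are all assumptions made for illustration.

```python
from collections import defaultdict


def flags_per_1000(rows, cutoff):
    """Delta check flags per 1,000 results that have a previous result.

    rows: iterable of (patient_id, collected_at, value) for ONE analyte,
    already filtered to the unit of interest. Uses an absolute-change cutoff.
    """
    by_patient = defaultdict(list)
    for patient_id, collected_at, value in rows:
        by_patient[patient_id].append((collected_at, value))

    pairs = 0
    flags = 0
    for results in by_patient.values():
        results.sort()  # chronological order within each patient
        for (_, prev), (_, curr) in zip(results, results[1:]):
            pairs += 1
            if abs(curr - prev) > cutoff:
                flags += 1
    return 1000.0 * flags / pairs if pairs else 0.0


# Hypothetical potassium export (mmol/L); collected_at is an ordinal timestamp.
rows = [
    ("A", 1, 4.0), ("A", 2, 4.5), ("A", 3, 6.0),
    ("B", 1, 3.5), ("B", 2, 3.75),
    ("C", 1, 5.0),  # single result, so no delta can be computed
]

for cutoff in (0.4, 1.0, 2.0):
    print(cutoff, round(flags_per_1000(rows, cutoff), 1))
```

Running the same function on one unit's rows with another unit's cutoff gives a rough picture of how a mismatched cutoff changes the flag rate. Flag counts alone do not show which flags are true positives, so chart review or a similar check is still needed before settling on a value.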

To highlight the effect of different cutoffs for different units, we applied the renal- and nonrenal-specific cutoffs both to their own units (matched) and to each other's units (mismatched). Table 2 illustrates how this remix affected the number of delta check flags per 1,000 test results. We found that applying the much tighter nonrenal cutoff to renal units resulted in twice as many flags for renal unit patients.

On the other hand, applying the renal units’ wider cutoff to nonrenal units would potentially lack sensitivity and miss specimen inaccuracies. Unfortunately, to date no automated middleware tools suggest delta check cutoffs based on a laboratory’s own patient population.

One resource that might help labs improve their current delta check process or learn more about selecting analytes for delta checks is the Clinical and Laboratory Standards Institute’s EP33 document “Use of Delta Checks in the Medical Laboratory,” released in 2016. As labs strive to achieve ever tighter performance standards, delta checks will remain an indispensable tool.

Thomas Kampfrath, PhD, DABCC, is a clinical biochemist at Santa Clara Valley Medical Center in San Jose, California. Email: thomas.kampfrath@hhs.sccgov.org